summing up 112

summing up is a recurring series of interesting articles, talks and insights on culture & technology that compose a large part of my thinking and work. Drop your email in the box below to get it – and much more – straight in your inbox.

Preventing the Collapse of Civilization, by Jonathan Blow

My thesis is that software is actually in decline right now. I don't think most people would believe me if I say that, it sure seems like it's flourishing. So I have to convince you at least that this is a plausible perspective.

What I'll say about that is that collapses, like the Bronze Age collapse, were massive. All civilizations were destroyed, but it took a hundred years. So if you're at the beginning of that collapse, in the first 20 years you might think "Well things aren't as good as they were 20 years ago but it's fine".

Of course I expect the reply to what I'm saying to be "You're crazy! Software is doing great, look at all these internet companies that are making all this money and changing the way that we live!" I would say yes, that is all happening. But what is really happening is that software has been free riding on hardware for the past many decades. Software gets "better" because it has better hardware to run on.

Our technology is accelerating at a frightening rate, a rate beyond our understanding of its impact. We spend millions of dollars and countless hours researching newer, faster tools, but we haven’t bothered to research the most fundamental, strategic issues that will provide the highest payoffs for augmenting our abilities.

Andreessen's Corollary: Ethical Dilemmas in Software Engineering, by Bryan Cantrill

I think that the key with ethics is not answers. Don't seek answers. Seek to ask questions. Tough questions. Questions that may make people feel very uncomfortable. Questions that won't necessarily have nice, neat answers. These questions are going to be complicated, but it is the act of asking them, that allows us to consider them. If we don't ask them, we're going to simply do the wrong thing.

And I think that if you've got an organization that in which question asking is encouraged, I think you will find that you will increasingly do the right thing. That you are less likely, I think, to move adrift with respect to these principles.

Questions are more important than answers. Answers change with time and circumstance, even for the same person, while questions endure.

21st Century Design, by Don Norman

Most of our technical fields who study systems leave out people, except there's some object in there called people. And every so often there are people who are supposed to do something to make sure the system works. But there's never any analysis of what it takes to do that, never any analysis of whether this is really an activity that's well suited for people, and when people are put into it or they burn out or they make errors etc., we blame the people instead of the way the system was built. Which doesn't at all take into account people's abilities and what we're really good at – and also what we're bad at.

Collaboration shows us that the world often isn’t zero-sum. It doesn't have to be humans versus technology, technology versus humans or humans versus other humans. Collaboration shows us that the whole is greater than the sum of its parts, and that it succeeds because of the differences between its participants, not despite them.

summing up 111


Socializing technology for the mobile human, by Bill Buxton

Everybody's into accelerators and incubators and wants to be a millionaire by the time they're 24 by doing the next big thing. So let me tell you what I think about the next big thing: there's no such thing as the next big thing! In fact chasing the next big thing is what is causing the problem.

That the next big thing isn't a thing. The next big thing is a change in the relationship amongst the things that are already there. Societies don't transform by making new things but by having their internal relationships change and develop.

I'd argue that what we know about sociology and how we think about things like kinship, moral order, social conventions, all of those things that we know about and have a language through social science apply equally to the technologies that we must start making. If we don't have that into our mindset, we're just gonna make a bunch of gadgets, a bunch of doodads, as opposed to build an ecosystem that's worthy of human aspirations. And actually technological potential.

We’re living in the present and we’ve forgotten that true innovation is about system transformation, not just a linear forward progression. That distinction is key to understanding the problem.

Privacy Rights and Data Collection in a Digital Economy, by Maciej Cegłowski

The internet economy today resembles the earliest days of the nuclear industry. We have a technology of unprecedented potential, we have made glowing promises about how it will transform the daily lives of our fellow Americans, but we don’t know how to keep its dangerous byproducts safe.

There is no deep reason that weds the commercial internet to a business model of blanket surveillance. The spirit of innovation is not dead in Silicon Valley, and there are other ways we can grow our digital economy that will maintain our lead in information technology, while also safeguarding our liberty. Just like the creation of the internet itself, the effort to put it on a safer foundation will require a combination of research, entrepreneurial drive and timely, enlightened regulation. But we did it before, and there’s no reason to think we can’t do it again.

No technology is entirely positive, or even neutral. Every technology is both a burden and a blessing. It is never a matter of either/or – it will always be both. And the question we must ask with urgency is whether we're going to manage the machine or whether it will manage us.

Notes on AI Bias, by Benedict Evans

I often think that the term ‘artificial intelligence’ is deeply unhelpful in conversations like this. It creates the largely false impression that we have actually created, well, intelligence – that we are somehow on a path to HAL 9000 or Skynet – towards something that actually understands. We aren’t. These are just machines, and it’s much more useful to compare them to, say, a washing machine. A washing machine is much better than a human at washing clothes, but if you put dishes in a washing machine instead of clothes and press start, it will wash them. They’ll even get clean. But this won’t be the result you were looking for, and it won’t be because the system is biased against dishes. A washing machine doesn’t know what clothes or dishes are - it’s just a piece of automation, and it is not conceptually very different from any previous wave of automation.

That is, just as for cars, or aircraft, or databases, these systems can be both extremely powerful and extremely limited, and depend entirely on how they’re used by people, and on how well or badly intentioned and how educated or ignorant people are of how these systems work.

Is it really about making machines and tools more intelligent? Or about augmenting individuals to be smarter or more productive? Maybe we should aim for something different: raising our collective intelligence. Because that is the intelligence we're part of, that shapes us as we shape it, that defines our culture and ultimately the borders of our world.

summing up 110


Defining the Dimensions of the “Space” of Computing, by Weiwei Hsu

Traditionally, we have thought of computing not in terms of a space of alternatives but in terms of improvements over time. Moore’s Law. Faster. Cheaper. More processors. More RAM. More mega-pixels. More resolution. More sensors. More bandwidth. More devices. More apps. More users. More data. More “engagement.” More everything.

While trending technologies dominate tech news and influence what we believe is possible and probable, we are free to choose. We don’t have to accept what monopolies offer. We can still inform and create the future on our own terms. We can return to the values that drove the personal computer revolution and inspired the first-generation Internet.

Glass rectangles and black cylinders are not the future. We can imagine other possible futures — paths not taken — by searching within a “space of alternative” computing systems. In this “space,” even though some dimensions are currently less recognizable than others, by investigating and hence illuminating the less-explored dimensions together, we can co-create alternative futures.

It's difficult to suspend our current view of how technology shapes our world and to imagine something completely new or different. We never paused to ask whether we could build from a better, different blueprint instead of building on top of existing technology. One of the most thoughtful pieces I've read lately.

Hypertext and Our Collective Destiny, by Tim Berners-Lee

It is a good time to sit back and consider to what extent we have actually made life easier. We have access to information: but have we been solving problems? Well, there are many things it is much easier for individuals today than 5 years ago. Personally I don't feel that the web has made great strides in helping us work as a global team.

Perhaps I should explain where I'm coming from. I had (and still have) a dream that the web could be less of a television channel and more of an interactive sea of shared knowledge. I imagine it immersing us as a warm, friendly environment made of the things we and our friends have seen, heard, believe or have figured out. I would like it to bring our friends and colleagues closer, in that by working on this knowledge together we can come to better understandings. If misunderstandings are the cause of many of the world's woes, then can we not work them out in cyberspace. And, having worked them out, we leave for those who follow a trail of our reasoning and assumptions for them to adopt, or correct.

Technology does not and cannot solve humanity's problems. We can enable, augment, and improve with technology, but ultimately humans have to deal with human problems.

Rebuilding the Typographic Society, by Matthew Butterick

Now and then there’s a bigger event—let’s call it a Godzilla moment—that causes a lot of destruction. And what is the Godzilla? Usually the Godzilla is technology. Technology arrives, and it wants to displace us—take over something that we were doing. That’s okay when technology removes a burden or an annoyance.

But sometimes, when technology does that, it can constrict the space we have for expressing our humanity. Then, we have to look for new outlets for ourselves, or what happens? What happens is that this zone of humanity keeps getting smaller. Technology invites us to accept those smaller boundaries, because it’s convenient. It’s relaxing. But if we do that long enough, what’s going to happen is we’re going to stagnate. We’re going to forget what we’re capable of, because we’re just playing in this really tiny territory.

The good news is that when Godzilla burns down the city with his fiery breath, we have space to rebuild. There’s an opportunity for us. But we can’t be lazy about it.

Technology should not replace humans; it should play to its real strength, which is amplifying human capabilities. And once we understand how technology works, we can begin to focus on improving its quality, creating tools that truly make things cheaper, faster, and better without destroying the very fabric of our humanity.

summing up 109


What the Hell is Going On? by David Perell

Like fish in water, we’re blind to how the technological environment shapes our behavior. The invisible environment we inhabit falls beneath the threshold of perception. Everything we do and think is shaped by the technologies we build and implement. When we alter the flow of information through society, we should expect radical transformations in commerce, education, and politics.

By understanding information flows, we gain a measure of control over them. Understanding how shifts in information flow impact society is the first step towards building a better world, so we can make technology work for us, not against us.

We shape our technological environment and our technological environment shapes us. But if we're blind to that change, how can we counter its negative effects and amplify the positive ones?

The Long Nose of Innovation, by Bill Buxton

What the Long Nose tells us is that any technology that is going to have significant impact in the next 10 years is already at least 10 years old. Any technology that is going to have significant impact in the next 5 years is already at least 15 years old, and likely still below the radar. Hence, beware of anyone arguing for some “new” idea that is “going to” take off in the next 5 years, unless they can trace its history back for 15. If they cannot do so, most likely they are either wrong, or have not done their homework.

The Long Nose redirects our focus from the “Edison Myth of original invention”, which is akin to an alchemist making gold. It helps us understand that the heart of the innovation process has far more to do with prospecting, mining, refining, goldsmithing, and of course, financing.

It's such an interesting notion that technological innovation is not the fast-moving thing it seems to be. Rather, technological change takes time, and revolutionary change even more so. As the saying goes, it takes years to become famous overnight.

WTF and the importance of human/tool co-evolution, by Tim O'Reilly

I think one of the big shifts for the 21st century is to change our sense of what collective intelligence is. Because we think of it as somehow the individual being augmented to be smarter, to make better decisions, maybe to work with other people in a more productive way. But in fact many of the tools of collective intelligence we are contributing to and the intelligence is outside of us. We are part of it and we are feeding into it.

It changes who we are, how we think. Shapes us as we shape it. And the question is whether we're gonna manage the machine or whether it will manage us. Now we tell ourselves in Silicon Valley that we're in charge. But you know, we basically built this machine, we think we know what it's going to do and it suddenly turns out not quite the way we expected.

We have to think about that fundamental goal that we give these systems. Because, yes there is all this intelligence, this new co-evolution and combination of human and machine, but ultimately it's driven by what we tell it to optimize for.

So much in computing is optimized for the single user: the personal computer, the smartphone, but also apps and most of our infrastructure. These days almost every room is equipped with electricity, light and buttons to control it. But just imagine a world where everyone carried a flashlight in their pocket, charged it every night, bought a new version every two years, saw only one thing at a time and always had one hand busy. How small and lonely that world would be.

summing up 108


Big Idea Famine, by Nicholas Negroponte

I believe that 30 years from now people will look back at the beginning of our century and wonder what we were doing and thinking about big, hard, long-term problems, particularly those of basic research. They will read books and articles written by us in which we congratulate ourselves about being innovative. The self-portraits we paint today show a disruptive and creative society, characterized by entrepreneurship, start-ups and big company research advertised as moonshots. Our great-grandchildren are certain to read about our accomplishments, all the companies started, and all the money made. At the same time, they will experience the unfortunate knock-on effects of an historical (by then) famine of big thinking.

We live in a dog-eat-dog society that emphasizes short-term competition over long-term collaboration. We think in terms of winning, not in terms of what might be beneficial for society. Kids aspire to be Mark Zuckerberg, not Alan Turing.

Ask yourself: What ideas, spaces and lifestyles will you leave behind for your grandchildren?

Forget privacy: you're terrible at targeting anyway, by Avery Pennarun

The state of personalized recommendations is surprisingly terrible. At this point, the top recommendation is always a clickbait rage-creating article about movie stars or whatever Trump did or didn't do in the last 6 hours. That's not what I want to read or to watch, but I sometimes get sucked in anyway, and then it's recommendation apocalypse time, because the algorithm now thinks I like reading about Trump, and now everything is Trump. Never give positive feedback to an AI.

This is, by the way, the dirty secret of the machine learning movement: almost everything produced by ML could have been produced, more cheaply, using a very dumb heuristic you coded up by hand, because mostly the ML is trained by feeding it examples of what humans did while following a very dumb heuristic.

There's no magic here. If you use ML to teach a computer how to sort through resumes, it will recommend you interview people with male, white-sounding names, because it turns out that's what your HR department already does. If you ask it what video a person like you wants to see next, it will recommend some political propaganda crap, because 50% of the time 90% of the people do watch that next, because they can't help themselves, and that's a pretty good success rate.

There's lots of talk about advances in artificial intelligence and machine learning, but very little about their shortcomings and their effects on society. Surrounded by hysteria, mistaken extrapolations, limited imagination and many other mistakes, we're distracted from thinking productively about our future.

The Bomb in the Garden, by Matthew Butterick

Now, you may say “hey, but the web has gotten so much better looking over 20 years.” And that’s true. But on the other hand, I don’t really feel like that’s the right benchmark, unless you think that the highest role of design is to make things pretty. I don’t.

I think of design excellence as a principle. A principle that asks this: Are you maximizing the possibilities of the medium?

That’s what it should mean. Because otherwise it’s too easy to congratulate ourselves for doing nothing. Because tools & technologies are always getting better. They expand the possibilities for us. So we have to ask ourselves: are we keeping up?

We somehow think technology gets better because it gets faster. But that confuses technology consumption with technology innovation. Consumption merely lets us do more of the same, while innovation enables us to do things that were previously impossible.

summing up 107


The Best Way to Predict the Future is to Create It. But Is It Already Too Late? by Alan Kay

Albert Einstein's quote "We cannot solve our problems with the same levels of thinking that we used to create them" is one of my favorite quotes. I like this idea because Einstein is suggesting something qualitative. That it is not doing more of what we're doing. It means if we've done things with technology that have gotten us in a bit of a pickle, doing more things with technology at the same level of thinking is probably gonna make things worse.

And there is a corollary with this: if your thinking abilities are below threshold you are going to be in real trouble because you will not realize until you have done yourself in.

Virtually everybody in the computing science has almost no sense of human history and context of where we are and where we are going. So I think of much of the stuff that has been done as inverse vandalism. Inverse vandalism is making things just because you can.

One important thing here is not to get trapped by our bad brains, by the limits of our thinking abilities. We're limited in this regard, and only tools, methods, habits and understanding can help us learn to see and finally reach a higher level of thinking.

Don’t be seduced by the pornography of change, by Mark Ritson

Marketing is a fascinating discipline in that most people who practice it have no idea about its origins and foundations, little clue about how to do the job properly in the present, but unbounded enthusiasm to speculate about the future and what it will bring. If marketers became doctors they would spend their time telling patients not what ailed them, but showing them an article about the future of robotic surgery in the year 2030. If they took over as accountants they would advise clients to forget about their current tax returns because within 50 years income will become obsolete thanks to lasers and 3D printing.

There are probably two good reasons for this obsession with the future over the practical reality of the present. First, marketing has always managed to attract a significant proportion of people who are attracted to the shiny stuff. Second, ambitious and overstated projections in the future are fantastic at garnering headlines and hits but have the handy advantage of being impossible to fact check.

If your job is to talk about what speech recognition or artificial intelligence will mean for marketing then you have an inherent desire to make it, and you, as important as possible. Marketers take their foot from the brake pedal of reality and put all their pressure on the accelerator of horseshit in order to get noticed, and future predictions provide the ideal place to drive as fast as possible.

And this doesn't apply only to marketing. Many fields today, computing & technology included, are obsessed with a revolutionary potential that has always been vastly, vastly overhyped. The hype surrounding these topics is sometimes so pervasive that raising skepticism is often seen as a failure to recognize that the hype is deserved.

Design in the Era of the Algorithm, by Josh Clark

Let’s not codify the past. On the surface, you’d think that removing humans from a situation might eliminate racism or stereotypes or any very human bias. In fact, the very real risk is that we’ll seal our bias—our past history—into the very operating system itself.

Our data comes from the flawed world we live in. In the realms of hiring and promotion, the historical data hardly favors women or people of color. In the cases of predictive policing, or repeat-offender risk algorithms, the data is both unfair and unkind to black men. The data bias codifies the ugly aspects of our past.

Rooting out this kind of bias is hard and slippery work. Biases are deeply held—and often invisible to us. We have to work hard to be conscious of our unconscious—and doubly so when it creeps into data sets. This is a data-science problem, certainly, but it’s also a design problem

The problem with data lies not only in the bias inherited from the data set, but also in algorithms that treat data as unbiased fact and in humans who believe in the objectivity of the results. Biases are only the extreme cases that make these problems visible. The deeper issue is that we stop ourselves from interpreting and studying nature, and thereby set the limits of our own interpretation.

summing up 106


The "Next Big Thing" is a Room, by Steve Krouse

Our computers have lured us into a cage of our own making. We’ve reduced ourselves to disembodied minds, strained eyes, and twitching, clicking, typing fingertips. Gone are our arms and legs, back, torsos, feet, toes, noses, mouths, palms, and ears. When we are doing our jobs, our vaunted knowledge work, we are a sliver of ourselves. The rest of us hangs on uselessly until we leave the office and go home.

Worse than pulling us away from our bodies, our devices have ripped us from each other. Where are our eyes when we speak with our friends, walk down the street, lay in bed, drive our cars? We know where they should be, and yet we also know where they end up much of the time. The tiny rectangles in our pockets have grabbed our attention almost completely.

These days almost every room is equipped with electricity, light and buttons to control it. It's so common that we hardly give it a thought. Light is not a device we carry around in our pockets, charge every night and replace with a new version every two years, like a flashlight. Imagine how small and lonely a world would be in which everyone carried their own personal flashlight, seeing only one thing at a time and always having one hand busy.

Machine Teaching, Machine Learning, and the History of the Future of Public Education, by Audrey Watters

Teaching machines were going to change everything. Educational television was going to change everything. Virtual reality was going to change everything. The Internet was going to change everything. The Macintosh computer was going to change everything. The iPad was going to change everything. And on and on and on.

Needless to say, movies haven’t replaced textbooks. Computers and YouTube videos haven’t replaced teachers. The Internet has not dismantled the university or the school house. Not for lack of trying, no doubt. And it might be the trying that we should focus on as much as the technology.

The transformational, revolutionary potential of these technologies has always been vastly, vastly overhyped. And it isn’t simply that it’s because educators or parents are resistant to change. It’s surely in part because the claims that marketers make are often just simply untrue.

The hype surrounding our technologies is sometimes so pervasive that raising skepticism is often seen as a failure to recognize that the hype is deserved. This is the game we're playing. It's no longer about real transformational power, about real change and potential, but mostly about superficial pop culture.

What We Actually Know About Software Development, and Why We Believe It’s True, by Greg Wilson

Think about how much has changed. Some things have changed without recognition, sadly the way we build software hasn't. There is nothing you do day by day that wouldn't have been familiar to me 25 years ago. Yes, you're using more powerful machines, yes you're using browsers and all this other stuff. But the way you work day to day has not improved.

Most of software development today is based on myth, superstition or arrogance. And this won't change until we're humble enough to admit when we're wrong. Only then can we find out how the world actually works and do things based on that knowledge.

summing up 105 - the mother of all demos

Exactly 50 years ago today, a man invented the future.

If you've been following my writing, talks and ideas, you've certainly heard his name: Doug Engelbart.

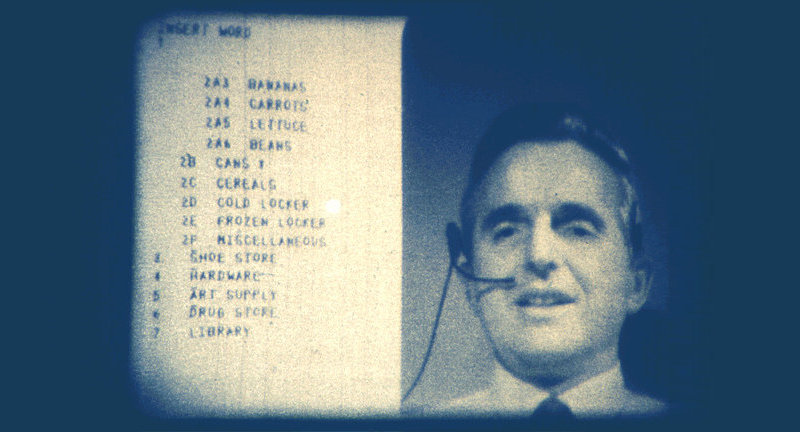

On 9th December 1968, he and his team demonstrated the prototype of his vision at the Fall Joint Computer Conference in San Francisco in front of about 1,000 computer professionals.

This demo introduced so many key concepts we still use today: the computer mouse, windows, graphics, video conferencing, word processing, copy & paste, hypertext, revision control, a collaborative real-time editor and much more. No wonder it's also known as the Mother of all Demos.

What's so striking about Engelbart's demo, however, isn't how much has changed since then, but how much has stayed the same.

To celebrate this somewhat special day, I want to deviate a bit from my usual format and highlight some of his key ideas that still impress me today.

The Mother of all Demos, which I alluded to earlier, is certainly one of the most important moments in computing history. If you can spare some time this holiday season, I can only recommend watching parts of it. It was a jaw-dropping experience for me, and a testament to what can happen when you get a bunch of intelligent people together and ask them to invent the future.

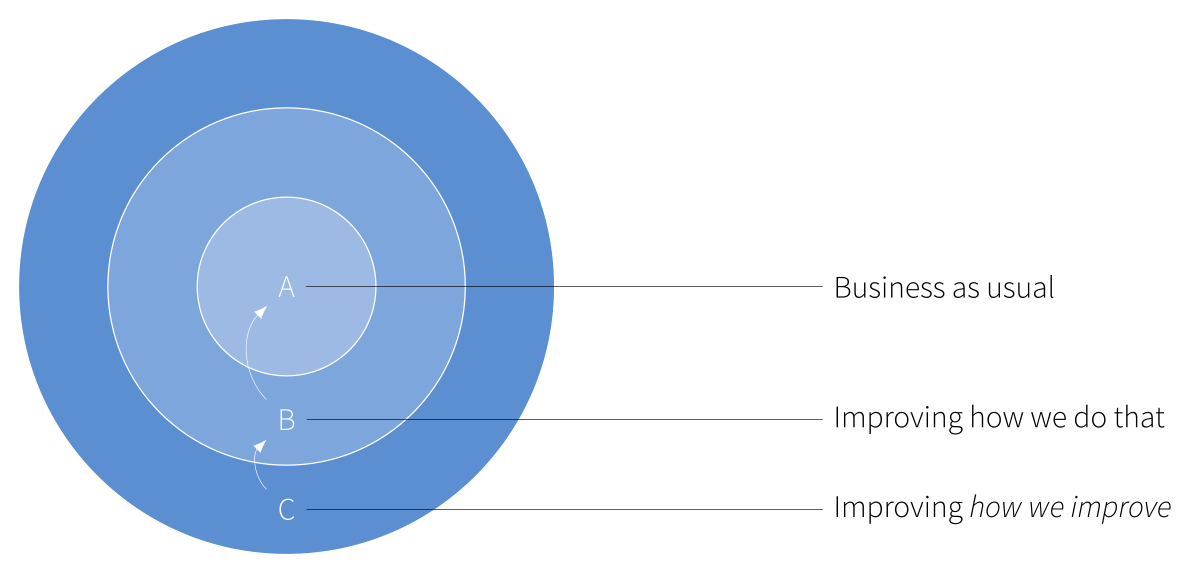

The ABCs of Organizational Improvement is a framework I rely heavily on when working with clients. It depicts three types of basic activities which should be ongoing in any healthy business:

(A) Business as usual: Processes you can find in every business, including core activities such as developing a product, manufacturing, marketing, sales etc. It is all about execution and carrying out today's strategy.

(B) Improving how we do that: Thinking about how to improve the ability to perform A. This includes training, hiring, adopting new tools & processes, workflows or bringing in external consultants.

(C) Improving how we improve: How can we improve how we improve? How can we get better at inventing better processes in B? This is the part most businesses struggle with, yet it brings the most value. This kind of meta-thinking marks the shift from incremental to exponential improvement and ultimately the advancement of the business as a whole.

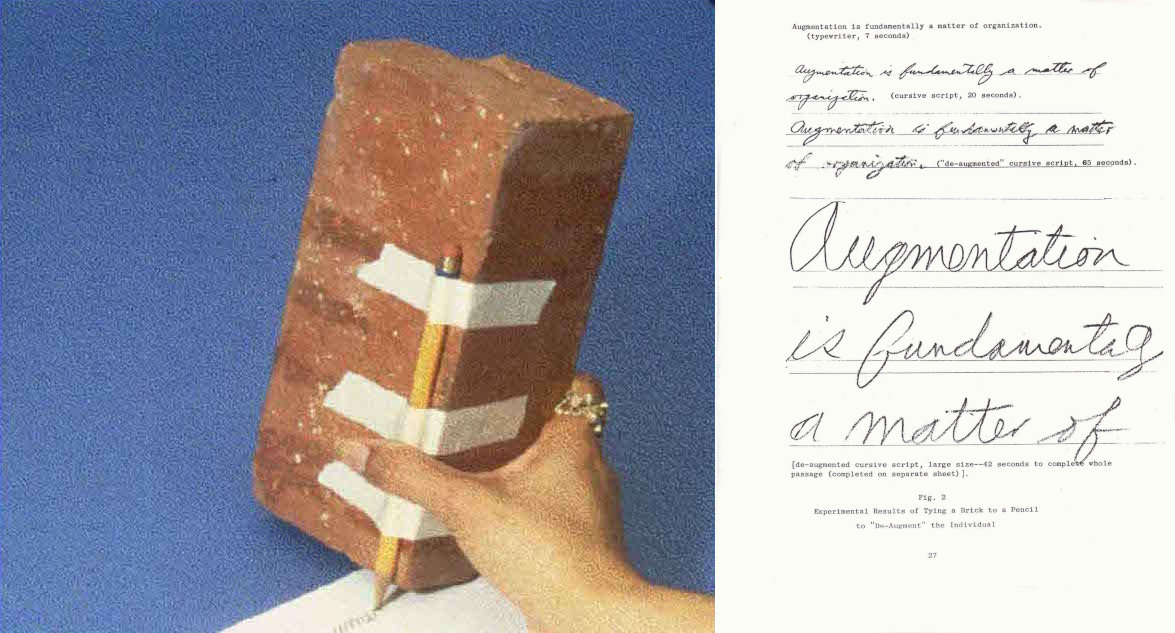

Augmenting Human Intellect: A Conceptual Framework lays out Engelbart's fundamental vision. In it you'll find his famous example of taping a pencil to a brick, which dramatically slows down writing. When you make the lower-level parts of an activity harder, the higher-level parts become almost impossible – like exploring ideas, structuring your thoughts or distilling something down to its essence. Our tools influence the thoughts we can think, and bad tools interfere with thinking well.

Engelbart's vision went much further: he intended to augment human intellect and enable people to think in powerful new ways, so that they could collectively solve urgent global problems. To really understand what he meant by that, you have to forget today. You have to forget everything you know about computers. You have to forget everything you think you know about computers. His vision is not about computers; it's about us and the future of mankind:

Technology should not aim to replace humans, rather amplify human capabilities.

Engelbart’s vision and philosophy continue to influence many technologists today, myself included. I hope I've been able to explain why.

summing up 104


People don't change, by Peter Gasston

People – I think – don't change that much. What changes over time are cultural differences and values, but people have the same goals, the same desires and the same urges.

Technology matches our desires, it doesn't make them. People haven't become more vain because now we have cameras. Cameras have been invented and they became popular because we've always been a bit vain, we've always wanted to see ourselves. It's just the technology was never in place to enable that expression of our characters before.

The more I study history the more I understand that people from different cultures, people from different historical periods... we're not exceptional, there's nothing exceptional about us, there's nothing exceptional about them. The technology might be new, but the way we react to it, the way we use it, is the same it always has been.

Whatever we think about ourselves, we aren't more intelligent than our ancestors, nor were they more intelligent than we are. But technology and knowledge play their role in augmenting us – and that is what makes us better.

Education That Takes Us To The 22nd Century, by Alan Kay

When we get fluent in powerful ideas, they are like adding new brain tissue that nature didn't give us. It's worthwhile thinking about what it means to get fluent in something like calculus and to realize that a normal person fluent in calculus can outthink Archimedes. If you're fluent at reading you can cover more ground than anybody in antiquity could in an oral culture.

So a good question for people who are dealing with computing is what if what's important about computing is deeply hidden? I can tell you as far as this one, most of the computing that is done in most of industry completely misses most of what's interesting about computing. They are basically at a first level of exposure to it and they're trying to optimize that. Think about that because that was okay fifty years ago.

Probably the most important thing I can urge on you today is to try and understand that computing is not exactly what you think it is. You have to understand this. What happened when the internet got done and a few other things back in the 70s or so was a big paradigm shift in computing and it hasn't spilled out yet. But if you're looking ahead to the 22nd century this is what you have to understand otherwise you're always going to be steering by looking in the rearview mirror.

If someone fluent in calculus can outthink Archimedes, and anyone who is literate can cover more ground than anybody in an oral culture, what can someone do with a computer today? The most interesting answer is that it isn't as much as we think. We keep mouthing platitudes about innovation and pretend we're much more advanced than our ancestors. But the more you look at what computing can really be about, the more pathetic everything we're doing right now sounds.

Why History Matters, by Audrey Watters

“Technology is changing faster than ever” – this is a related, repeated claim. It’s a claim that seems to be based on history, one that suggests that, in the past, technological changes were slow; now, they’re happening so fast and we’re adopting new technologies so quickly – or so the story goes – that we can no longer make any sense of what is happening around us, and we’re just all being swept along in a wave of techno-inevitability.

Needless to say, I don’t think the claim is true – or at the very least, it is a highly debatable one. Some of this, I’d argue, is simply a matter of confusing technology consumption for technology innovation. Some of this is a matter of confusing upgrades for breakthroughs – Apple releasing a new iPhone every year might not be the best rationale for insisting we are experiencing rapid technological change. Moreover, much of the pace of change can be accounted for by the fact that many new technologies are built atop – quite literally – pre-existing systems: railroads followed the canals; telegraphs followed the railroads; telephones followed the telegraphs; cable television followed the phone lines...

So why then does the history of tech matter? It matters because it helps us think about beliefs and practices and systems and institutions and ideology. It helps make visible, I’d hope, some of the things that time and familiarity has made invisible. It helps us think about context. It helps us think about continuity as much as change. And I think it helps us be more attuned to the storytelling and the myth-making that happens so frequently in technology and reform circles.

We're confusing technology consumption with technology innovation. Innovation augments us so we can do things that were previously impossible; consumption just lets us do more of the same. Maybe better, faster or whatever, but still the same.

summing up 103

Rethinking CS Education, by Alan Kay

If you want to get something done, the way you do it is not so much trying to convince somebody but to create a tribe that is a conspiracy. Because anthropologically that is what we are more than any other thing. We are tribal beings and we tend to automatically oppose things outside of our tribe, even if they're good ideas, because that isn't the way we think – in fact we're not primarily thinking animals.

The theatrical part of this is lots bigger than we think, the limitations are much smaller than we think and the relationship we have with our heritage is that we are much more different than we think we are. I hate to see computing and computer science watered down to some terrible kind of engineering that the Babylonians might have failed at.

That is pathetic! And I'm saying it in this strong way because you need to realize that we're in the middle of a complete form of bullshit that has grown up out of the pop culture.

We're stuck in conversations around hypes and trending technological topics. At the same time our world gets ever more complex and throws ever more complex problems at us. I really hope that we can grow up soon and use the power the computer grants us to actually augment ourselves.

Neither Paper Nor Digital Does Active Reading Well, by Baldur Bjarnason

A recurring theme in software development is the more you dig into the research the greater the distance is between what actual research seems to say versus what the industry practices.

Develop a familiarity with, for example, Alan Kay’s or Douglas Engelbart’s visions for the future of computing and you are guaranteed to become thoroughly dissatisfied with the limitations of every modern OS. Reading up on hypertext theory and research, especially on hypertext as a medium, is a recipe for becoming annoyed at The Web. Catching up on usability research throughout the years makes you want to smash your laptop against the wall in anger. And trying to fill out forms online makes you scream ‘it doesn’t have to be this way!’ at the top of your lungs.

That software development doesn’t deal with research or attempts to get at hard facts is endemic to the industry.

It seems crazy to me that most other subjects look at their history, while computing mostly ignores the past, thinking that new is always better. The problem these days isn't how to innovate, but how to get society to adopt the good ideas that already exist.

If Software Is Eating the World, What Will Come Out the Other End? by John Battelle

So far, it’s mostly shit. Most of our society simply isn’t benefiting from this trend of software eating the world. In fact, most of them live in the very world that software ate.

The world is not just software. The world is physics, it’s crying babies and shit on the sidewalk, it’s opioids and ecstasy, it’s car crashes and Senate hearings, lovers and philosophers, lost opportunities and spinning planets around untold stars. The world is still real.

Software – data, code, algorithms, processing – software has dressed the world in new infrastructure. But this is a conversation, not a process of digestion. It is a conversation between the physical and the digital, a synthesis we must master if we are to avoid terrible fates, and continue to embrace fantastic ones.

Only those who know nothing of the history of technology believe that a technology is entirely neutral. It always has implications, positive and negative. And all too often we seem to ignore the downsides, both for the physical world we live in and for technology itself.

summing up 102

The Cult of the Complex, by Jeffrey Zeldman

In an industry that extols innovation over customer satisfaction, and prefers algorithm to human judgement (forgetting that every algorithm has human bias in its DNA), perhaps it should not surprise us that toolchains have replaced know-how.

Likewise, in a field where young straight white dudes take an overwhelming majority of the jobs (including most of the management jobs) it’s perhaps to be expected that web making has lately become something of a dick measuring competition.

It was not always this way, and it needn’t stay this way. If we wish to get back to the business of quietly improving people’s lives, one thoughtful interaction at a time, we must rid ourselves of the cult of the complex. Admitting the problem is the first step in solving it.

Solutions to many problems seem most brilliant when they appear most obvious. Simple, even. But in many cases we throw everything we have against the wall and see what sticks. It's on us to notice when we forget that our job is to solve business problems, client problems and, most importantly, human problems.

The Relativity of Wrong, by Isaac Asimov

In every century people have thought they understood the universe at last, and in every century they were proved to be wrong. It follows that the one thing we can say about our modern "knowledge" is that it is wrong.

The basic trouble, you see, is that people think that "right" and "wrong" are absolute; that everything that isn't perfectly and completely right is totally and equally wrong. However, I don't think that's so. It seems to me that right and wrong are fuzzy concepts.

A very interesting thought which reminds me very much of a short poem by Piet Hein: The road to wisdom? — Well, it's plain and simple to express: Err and err and err again but less and less and less.

Known Unknowns, by James Bridle

Technology does not emerge from a vacuum; it is the reification of the beliefs and desires of its creators. It is assembled from ideas and fantasies developed through evolution and culture, pedagogy and debate, endlessly entangled and enfolded. The belief in an objective schism between technology and the world is nonsense, and one that has very real outcomes.

Cooperation between human and machine turns out to be a more potent strategy than trusting to the computer alone.

This strategy of cooperation, drawing on the respective skills of human and machine rather than pitting one against the other, may be our only hope for surviving life among machines whose thought processes are unknowable to us. Nonhuman intelligence is a reality—it is rapidly outstripping human performance in many disciplines, and the results stand to be catastrophically destructive to our working lives. These technologies are becoming ubiquitous in everyday devices, and we do not have the option of retreating from or renouncing them. We cannot opt out of contemporary technology any more than we can reject our neighbors in society; we are all entangled.

As we envision, plan and build technology, human bias will always be part of it. We can't just pass our responsibility on to technology and bury our heads in the sand. The question we should, and have to, ask ourselves is a different one: how can we use and leverage technology as a tool, as a way to augment ourselves to do things that were previously impossible? I think collaboration and cooperation might be an answer.

summing up 101

The "Space" of Computing, by Weiwei Hsu

Today, we have been building and investing so much of our time into the digital world and we have forgotten to take a step back and take a look at the larger picture. Not only do we waste other people's time by making them addicted to this device world, we have also created a lot of waste in the real world. At the same time we're drowning in piles and piles of information because we never took the time to architect a system that enables us to navigate through them. We're trapped in these rectangular screens and we have often forgotten how to interact with the real world, with real humans. We have been building and hustling – but hey, we can also slow down and rethink how we want to dwell in both the physical world and the digital world.

At some point in the future we will leave this world and what we'll leave behind are spaces and lifestyles that we've shaped for our grandchildren. So I would like to invite you to think about what we want to leave behind, as we continue to build both digitally and physically. Can we be more intentional so that we shape and leave behind a more humane environment?

What we use a computer for on a daily basis is only a small part of what a computer could offer us. Instead, most of our conversation revolves around hype and trending technological topics. What we desperately need is to take a step back and figure out ways of thinking that let us tackle complex problems in an ever more complex world.

Pace Layering: How Complex Systems Learn and Keep Learning, by Stewart Brand

Fast learns, slow remembers. Fast proposes, slow disposes. Fast is discontinuous, slow is continuous. Fast and small instructs slow and big by accrued innovation and by occasional revolution. Slow and big controls small and fast by constraint and constancy. Fast gets all our attention, slow has all the power.

All durable dynamic systems have this sort of structure. It is what makes them adaptable and robust.

The total effect of the pace layers is that they provide a many-leveled corrective, stabilizing feedback throughout the system. It is precisely in the apparent contradictions between the pace layers that civilization finds its surest health.

Too often we're thinking about the superficial, the fast, the shallow. That is not necessarily a bad thing, but it easily becomes one if it's the only thing we do. This concept is one of those that, once your brain has been exposed to it, you start seeing everywhere.

The Garden and the Stream: A Technopastoral, by Mike Caulfield

I find it hard to communicate with a lot of technologists anymore. It’s like trying to explain literature to someone who has never read a book. You’re asked “So basically a book is just words someone said written down?” And you say no, it’s more than that. But how is it more than that?

I am going to make the argument that the predominant form of the social web — that amalgam of blogging, Twitter, Facebook, forums, Reddit, Instagram — is an impoverished model for learning and research and that our survival as a species depends on us getting past the sweet, salty fat of “the web as conversation” and on to something more timeless, integrative, iterative, something less personal and less self-assertive, something more solitary yet more connected. I don’t expect to convince many of you, but I’ll take what I can get.

We can imagine a world that is so much better than this one. And more importantly we can build it. But in order to do that we have to think bigger than the next hype, the next buzzword and the next press release. We have to seriously interrogate the assumptions that are hidden in plain sight.

summing up 100

Wow. After sharing and discussing close to a thousand (964 to be precise) articles, talks, essays, videos and links, my summing up column turns 100.

I originally started this series a little over five years ago to keep track of what I was reading. Little did I know then how much this effort would help me build up a large part of my expertise, methods, strategies and way of thinking. I'm also quite relieved that in all that time, nobody asked me about the Swedish conspiracy.

To celebrate this somewhat special occasion, I want to deviate a bit from the usual format and highlight some key figures and favourite articles that still impress me to this day.

Doug Engelbart, one of the fathers of personal computing, is definitely one of my personal heroes. He dedicated his life to the pursuit of developing technology to augment human intellect. He didn't see this as a technological problem though, but as a human problem, with technology falling out as part of a solution. His methods and models are brilliant and I rely heavily on them when working with clients.

- The Mother of All Demos

- Improving Our Ability to Improve: A Call for Investment in a New Future

- Augmenting Human Intellect: A Conceptual Framework

When it comes to thinking about the future, you can't do better than Alan Kay. He is perhaps one of the best-known computing visionaries still around today, and his reasoning is spot on when it comes to invention, innovation and strategies for succeeding in a digital world.

Neil Postman is one of my favourite media critics and, funnily enough, was never categorically against technology. But he warned us vigorously not to be uncritical of technology. His predictions, cautions and propositions on how we become used by technology rather than making use of technology have been spot on so far – unfortunately.

- The Surrender of Culture to Technology

- Five Things We Need to Know About Technological Change

- Technopoly

There's often a thin line between madness and genius, and Ted Nelson walks that line confidently. The original inventor of hypertext, an internet pioneer and visionary, he saw the need for interconnected documents decades before the World Wide Web was born. And even now his vision is far from complete – luckily, the size of his ambition hasn't changed.

Bret Victor is one of the thinkers I respect most in our industry. His talks and essays have been highly influential to me. In the spirit of Doug Engelbart, Bret thinks deeply about how to create a new dynamic medium that shapes computing for the 21st century and allows us to see, understand and solve complex problems.

- The Future of Programming

- Media for Thinking the Unthinkable

- A Brief Rant on the Future of Interaction Design

It's rare that I don't fall in love with a talk by Maciej Cegłowski, who speaks mostly about the excesses of technology and its impact on society. His style of storytelling, along with his ingenious insights, is just amazing.

- Superintelligence: The Idea That Eats Smart People

- The Website Obesity Crisis

- Build a Better Monster: Morality, Machine Learning, and Mass Surveillance

Audrey Watters is mostly known for her prolific work on education technology issues and tech in general. The witty way she interrogates the stories about technology we tell ourselves – or have been told to us – is full of deep insight.

- The Best Way to Predict the Future is to Issue a Press Release

- Driverless Ed-Tech: The History of the Future of Automation in Education

Finally, for those of you who can't get enough, here are some tidbits I had a hard time leaving out. You're welcome:

- When We Build by Wilson Miner

- The future of humanity and technology by Stephen Fry

- Memento Product Mori: Of ethics in digital product design by Sebastian Deterding

- The Web's Grain by Frank Chimero

- John Cleese on Creativity In Management

Thanks a lot for your continued support and feedback over the past years; it is greatly appreciated. You're very welcome to subscribe to this series and get it directly in your inbox along with some cool stuff that you won't find anywhere else on the site.

Lastly, if you have any feedback, critique, tips, ideas, comments or free bags of money, I'd be very glad to hear from you. Thank you.

summing up 99

Measuring Collective IQ, by Doug Engelbart

We have the opportunity to change our thinking and basic assumptions about the development of computing technologies. The emphasis on enhancing security and protecting turf often impedes our ability to solve problems collectively. If we can re-examine those assumptions and chart a different course, we can harness all the wonderful capability of the systems that we have today.

People often ask me how I would improve the current systems, but my response is that we first need to look at our underlying paradigms—because we need to co-evolve the new systems, and that requires new ways of thinking. It’s not just a matter of “doing things differently,” but thinking differently about how to approach the complexity of problem-solving today.

in a world where we've grown multiple orders of magnitude in our computing capacity, where we spend millions of dollars on newer, faster tools and technology, we put little emphasis on how we can augment human thinking and problem solving. and as doug says, it is not about thinking differently about these problems, it is about thinking differently about our ability to solve these problems.

The Seven Deadly Sins of Predicting the Future of AI, by Rodney Brooks

Suppose a person tells us that a particular photo is of people playing Frisbee in the park, then we naturally assume that they can answer questions like “what is the shape of a Frisbee?”, “roughly how far can a person throw a Frisbee?”, “can a person eat a Frisbee?”, “roughly how many people play Frisbee at once?”, “can a 3 month old person play Frisbee?”, “is today’s weather suitable for playing Frisbee?”. Today’s image labelling systems that routinely give correct labels, like “people playing Frisbee in a park” to online photos, have no chance of answering those questions. Besides the fact that all they can do is label more images and can not answer questions at all, they have no idea what a person is, that parks are usually outside, that people have ages, that weather is anything more than how it makes a photo look, etc., etc.

Here is what goes wrong. People hear that some robot or some AI system has performed some task. They then take the generalization from that performance to a general competence that a person performing that same task could be expected to have. And they apply that generalization to the robot or AI system.

Today’s robots and AI systems are incredibly narrow in what they can do. Human style generalizations just do not apply. People who do make these generalizations get things very, very wrong.

we are surrounded by hysteria about artificial intelligence: mistaken extrapolations, limited imagination and many more mistakes that distract us from thinking productively about the future. whether or not ai succeeds in the long term, it will nevertheless be developed and used with uncompromising effort – regardless of any consequences.

Using Artificial Intelligence to Augment Human Intelligence, by Shan Carter and Michael Nielsen

Unfortunately, many in the AI community greatly underestimate the depth of interface design, often regarding it as a simple problem, mostly about making things pretty or easy-to-use. In this view, interface design is a problem to be handed off to others, while the hard work is to train some machine learning system.

This view is incorrect. At its deepest, interface design means developing the fundamental primitives human beings think and create with. This is a problem whose intellectual genesis goes back to the inventors of the alphabet, of cartography, and of musical notation, as well as modern giants such as Descartes, Playfair, Feynman, Engelbart, and Kay. It is one of the hardest, most important and most fundamental problems humanity grapples with.

the speed, performance or productivity of computers are mostly red herrings. the main problem is how we can leverage the computer as a tool. in other words, how can we use the computer to augment ourselves to do things that were previously impossible?

summing up 98

How To Be a Systems Thinker, by Mary Catherine Bateson

There has been so much excitement and sense of discovery around the digital revolution that we’re at a moment where we overestimate what can be done with AI, certainly as it stands at the moment.

One of the most essential elements of human wisdom at its best is humility, knowing that you don’t know everything. There’s a sense in which we haven’t learned how to build humility into our interactions with our devices. The computer doesn’t know what it doesn’t know, and it's willing to make projections when it hasn’t been provided with everything that would be relevant to those projections.

after all, computers are still tools we should take advantage of, to augment ourselves to do things that were previously impossible, to help us make our lives better. but all too often it seems to me that everyone is used by computers, for purposes that seem to know no boundaries.

Fantasies of the Future: Design in a World Being Eaten by Software, by Paul Robert Lloyd

Drawing inspiration from architectural practice, its successes and failures, I question the role of design in a world being eaten by software. When the prevailing technocratic culture permits the creation of products that undermine and exploit users, who will protect citizens within the digital spaces they now inhabit?

We need to take it upon ourselves to be more critical and introspective. This shouldn’t be too hard. After all, design is all about questioning what already exists and asking how it could be improved for the better.

Perhaps we need a new set of motivational posters. Rather than move fast and break things, perhaps slow down and ask more questions.

we need a more thoughtful, questioning approach to digital. how does a single technology, a tool or a digital channel help us improve? the answer is out there somewhere, but we have to stop ourselves more often to ask "why?".

Storytime, by Ron Gilbert

The grand struggle of creativity can often be about making yourself stupid again. It's like turning yourself into a child who views the world with wonderment and excitement.

Creating something meaningful isn't easy, it's hard. But that's why we should do it. If you ever find yourself comfortable with what you're making or creating, then you need to push yourself. Push yourself out of your comfort zone and push yourself to the point of failure and then beyond.

When I was a kid, we would go skiing a lot. At the end of the day all the skiers were coming to the lodge and I used to think it was the bad skiers that were covered in snow and the good skiers that were all clean, with no snow on them. But it turns out the exact opposite is true: it was the good skiers that were covered in snow from pushing themselves, pushing themselves beyond the limits and into their breaking points, getting better and then pushing themselves harder. Creativity is the same thing. It's like you push hard, you push until you're scared and afraid, you push until you break, you push until you fall and then you get up and you do it again. Creativity is really a journey. It's a wonderful journey where you start out as one person and end as another.

it's always a lot harder to create something meaningful than just creating something. but that's exactly the reason why you should do it. a great talk by one of my favourite game designers.

summing up 97

Everything Easy is Hard Again, by Frank Chimero

So much of how we build websites and software comes down to how we think. The churn of tools, methods, and abstractions also signify the replacement of ideology. A person must usually think in a way similar to the people who created the tools to successfully use them. It’s not as simple as putting down a screwdriver and picking up a wrench. A person needs to revise their whole frame of thinking; they must change their mind.

The new methods were invented to manage a level of complexity that is completely foreign to me and my work. It was easy to back away from most of this new stuff when I realized I have alternate ways of managing complexity. Instead of changing my tools or workflow, I change my design. It’s like designing a house so it’s easy to build, instead of setting up cranes typically used for skyscrapers. Beyond that, fancy implementation has never moved the needle much for my clients.

So, I thought it would be useful to remind everyone that the easiest and cheapest strategy for dealing with complexity is not to invent something to manage it, but to avoid the complexity altogether with a more clever plan.

a fancy implementation has never moved the needle much for my clients either. what has, though, is building relationships and letting technology support that process. we are an increasingly digital society, yes, but that doesn't mean we have to let technology take over.

How To Become A Centaur, by Nicky Case

Human nature, for better or worse, doesn’t change much from millennium to millennium. If you want to see the strengths that are unique and universal to all humans, don’t look at the world-famous award-winners — look at children. Children, even at a young age, are already proficient at: intuition, analogy, creativity, empathy, social skills. Some may scoff at these for being “soft skills”, but the fact that we can make an AI that plays chess but not one that can hold a normal five-minute conversation is proof that these skills only seem “soft” to us because evolution’s already put in the 3.5 billion years of hard work for us.

So, if there’s just one idea you take away from this entire essay, let it be Mother Nature’s most under-appreciated trick: symbiosis.

Symbiosis shows us you can have fruitful collaborations even if you have different skills, or different goals, or are even different species. Symbiosis shows us that the world often isn’t zero-sum — it doesn’t have to be humans versus AI, or humans versus centaurs, or humans versus other humans. Symbiosis is two individuals succeeding together not despite, but because of, their differences. Symbiosis is the “+”.

zero-sum games most often win our attention, but the vast majority of our interactions are positive-sum: when you share, when you buy, when you learn, when you talk. similarly with technology and computers: we can only improve if we use technology to augment ourselves in order to allow for new, previously-impossible ways of thinking, of living, of being.

How To Save Innovation From Itself, by Alf Rehn

At the same time as we so happily create everything from artificial intelligences to putting "smart" into absolutely bloody everything, there are still so many actual, real problems unsolved. I do not need a single more problem solved; every one of my actual problems has been solved. There is not a single thing I could even dream of wanting that hasn't been already created. Yes, I can upgrade. I can buy a slightly cooler car. I can buy slightly better clothes. I can buy slightly faster phones. But frankly I am just consuming myself into the grave, because I have an empty life.

In all this innovation bullshit, what has happened, is that rather than look at true, meaningful change, we have turned innovation into one more bullshit phrase, into one more management buzzword. Do we actually have discussions about whether we're doing meaningful work or just work that happens to be paid at the moment? Regardless of what company we work in, we need to look at the products we create, the things we create, and say "yes, this can matter". But it can not just matter to me, it needs to matter to someone else as well.

We have blind spots, we all have them. We all have our biases. It is acceptable perchance to have a bias as an individual. But when the entire community or an entire nation has a bias, this says we have not gone far enough.

we seem to spend so much talent, research, time, energy and money to create things that nobody needs, just because we feel we have to innovate somehow. and the problem isn't how to innovate or the innovation per se, but how to get society to adopt the good ideas that already exist.

summing up 96

summing up is a recurring series on topics & insights that compose a large part of my thinking and work. drop your email in the box below to get it – and much more – straight in your inbox.

Inadvertent Algorithmic Cruelty, by Eric Meyer

Algorithms are essentially thoughtless. They model certain decision flows, but once you run them, no more thought occurs. To call a person “thoughtless” is usually considered a slight, or an outright insult; and yet, we unleash so many literally thoughtless processes on our users, on our lives, on ourselves.

this hits home on so many levels. we throw technology at people, hoping something will stick. instead, we should use the computer and algorithms to augment ourselves to do things that were previously impossible, to help us make our lives better. that is the sweet spot of our technology.

10 Timeframes, by Paul Ford

The time you spend is not your own. You are, as a class of human beings, responsible for more pure raw time, broken into more units, than almost anyone else. You are about to spend whole decades, whole centuries, of cumulative moments, of other people’s time. People using your systems, playing with your toys, fiddling with your abstractions. And I want you to ask yourself when you make things, when you prototype interactions, am I thinking about my own clock, or the user’s? Am I going to help someone make order in his or her life?

If we are going to ask people, in the form of our products, in the form of the things we make, to spend their heartbeats—if we are going to ask them to spend their heartbeats on us, on our ideas, how can we be sure, far more sure than we are now, that they spend those heartbeats wisely?

our technological capability changes much faster than our culture. we first create our technologies and then they change our society and culture. therefore we have a huge responsibility to look at things from the other person's point of view – and to do what's best for them. in other words, be considerate.

Finding the Exhaust Ports, by Jon Gold

Lamenting about the tech industry’s ills when self-identifying as a technologist is a precarious construction. I care so deeply about using the personal computer for liberation & augmentation. I’m so, so burned out by 95% of the work happening in the tech industry. Silicon Valley mythologizes newness, without stopping to ask “why?”. I’m still in love with technology, but increasingly with nuance into that which is for us, and that which productizes us.

Perhaps when Bush prophesied lightning-quick knowledge retrieval, he didn’t intend for that knowledge to be footnoted with Outbrain adverts. Licklider’s man-computer symbiosis would have been frustrated had it been crop-dusted with notifications. Ted Nelson imagined many wonderfully weird futures for the personal computer, but I don’t think gamifying meditation apps was one of them.

every day we make a big fuss about some seemingly new hype (ai, blockchain, vr, iot, cloud, ... what next?). as neil postman and others have cautioned us, technologies tend to become mythic. that is, they are perceived as if they were god-given, part of the natural order of things, gifts of nature, and not as artifacts produced in a specific political and historical context. in doing so, we completely fail to recognize how we can use technology to augment ourselves to do things that were previously impossible, to help us make our lives better.

summing up 95

Legends of the Ancient Web, by Maciej Cegłowski

Radio brought music into hospitals and nursing homes, it eased the profound isolation of rural life, it let people hear directly from their elected representatives. It brought laughter and entertainment into every parlor, saved lives at sea, gave people weather forecasts for the first time.

But radio waves are just oscillating electromagnetic fields. They really don't care how we use them. All they want is to go places at the speed of light. It is hard to accept that good people, working on technology that benefits so many, with nothing but good intentions, could end up building a powerful tool for the wicked. But we can't afford to re-learn this lesson every time.

Technology interacts with human nature in complicated ways, and part of human nature is to seek power over others, and manipulate them. Technology concentrates power. We have to assume the new technologies we invent will concentrate power, too. There is always a gap between mass adoption and the first skillful political use of a medium. With the Internet, we are crossing that gap right now.

only those who know nothing about technological history believe that technology is entirely neutral. it always has a bias towards being used in certain ways and not others. a great parallel to what we're facing now with the internet.

Silicon Valley Is Turning Into Its Own Worst Fear, by Ted Chiang

In psychology, the term “insight” is used to describe a recognition of one’s own condition, such as when a person with mental illness is aware of their illness. More broadly, it describes the ability to recognize patterns in one’s own behavior. It’s an example of metacognition, or thinking about one’s own thinking, and it’s something most humans are capable of but animals are not. And I believe the best test of whether an AI is really engaging in human-level cognition would be for it to demonstrate insight of this kind.

I used to find it odd that these hypothetical AIs were supposed to be smart enough to solve problems that no human could, yet they were incapable of doing something most every adult has done: taking a step back and asking whether their current course of action is really a good idea. Then I realized that we are already surrounded by machines that demonstrate a complete lack of insight, we just call them corporations. Corporations don’t operate autonomously, of course, and the humans in charge of them are presumably capable of insight, but capitalism doesn’t reward them for using it. On the contrary, capitalism actively erodes this capacity in people by demanding that they replace their own judgment of what “good” means with “whatever the market decides.”

the problem is this: if you're never exposed to new ideas and contexts, if you grow up only being shown one way of thinking about businesses & technology and being told that there are no other ways to think about this, you grow up thinking you know what you're doing.

The resource leak bug of our civilization, by Ville-Matias Heikkilä

When people try to explain the wastefulness of today's computing, they commonly offer something I call "tradeoff hypothesis". According to this hypothesis, the wastefulness of software would be compensated by flexibility, reliability, maintainability, and perhaps most importantly, cheap programming work.

I used to believe in the tradeoff hypothesis as well. However, during recent years, I have become increasingly convinced that the portion of true tradeoff is quite marginal. An ever-increasing portion of the waste comes from abstraction clutter that serves no purpose in final runtime code. Most of this clutter could be eliminated with more thoughtful tools and methods without any sacrifices.

we too often seem to adjust to the limitations of technology, instead of creating solutions for a problem with the help of technology.

summing up 94

Some excerpts from recent Alan Kay emails

Socrates didn't charge for "education" because when you are in business, the "customer starts to become right". Whereas in education, the customer is generally "not right". Marketeers are catering to what people want, educators are trying to deal with what they think people need (and this is often not at all what they want).

Another perspective is to note that one of the human genetic "built-ins" is "hunting and gathering" – this requires resources to "be around", and is essentially incremental in nature. It is not too much of an exaggeration to point out that most businesses are very like hunting-and-gathering processes, and think of their surrounds as resources put there by god or nature for them. Most don't think of the resources in our centuries as actually part of a human-made garden via inventions and cooperation, and that the garden has to be maintained and renewed.

these thoughts are a pure gold mine. a fundamental problem for most businesses is that one cannot innovate under business objectives and one cannot accomplish business objectives under innovation. ideally, you need both, but not at the same time.

How a handful of tech companies control billions of minds every day, by Tristan Harris

When we talk about technology, we tend to talk about it as this blue sky opportunity. It could go any direction. And I want to get serious for a moment and tell you why it's going in a very specific direction. Because it's not evolving randomly. There's a hidden goal driving the direction of all of the technology we make, and that goal is the race for our attention. Because every new site or app has to compete for one thing, which is our attention, and there's only so much of it. And the best way to get people's attention is to know how someone's mind works.

A simple example is YouTube. YouTube wants to maximize how much time you spend. And so what do they do? They autoplay the next video. And let's say that works really well. They're getting a little bit more of people's time. Well, if you're Netflix, you look at that and say, well, that's shrinking my market share, so I'm going to autoplay the next episode. But then if you're Facebook, you say, that's shrinking all of my market share, so now I have to autoplay all the videos in the newsfeed before waiting for you to click play. So the internet is not evolving at random. The reason it feels like it's sucking us in the way it is is because of this race for attention. We know where this is going. Technology is not neutral, and it becomes this race to the bottom of the brain stem of who can go lower to get it.

we seem to hold the notion that technology is always good. but that is simply not the case. every technology is both a burden and a blessing. not either or, but this and that.

The world is not a desktop, by Mark Weiser

The idea, as near as I can tell, is that the ideal computer should be like a human being, only more obedient. Anything so insidiously appealing should immediately give pause. Why should a computer be anything like a human being? Are airplanes like birds, typewriters like pens, alphabets like mouths, cars like horses? Are human interactions so free of trouble, misunderstanding, and ambiguity that they represent a desirable computer interface goal? Further, it takes a lot of time and attention to build and maintain a smoothly running team of people, even a pair of people. A computer I need to talk to, give commands to, or have a relationship with (much less be intimate with), is a computer that is too much the center of attention.

in a world where computers increasingly become human-like, they will inevitably become the center of attention. that is the exact opposite of what they should be: invisible, helping us focus our attention on ourselves and the people we live with.

summing up 93

The future of humanity and technology, by Stephen Fry

Above all, be prepared for the bullshit, as AI is lazily and inaccurately claimed by every advertising agency and app developer. Companies will make nonsensical claims like "our unique and advanced proprietary AI system will monitor and enhance your sleep" or "let our unique AI engine maximize the value of your stock holdings". Yesterday they would have said "our unique and advanced proprietary algorithms" and the day before that they would have said "our unique and advanced proprietary code". But let's face it, they're almost always talking about the most basic software routines. The letters A and I will become degraded and devalued by overuse in every field in which humans work. Coffee machines, light switches, Christmas trees will be marketed as AI proficient, AI savvy or AI enabled. But despite this inevitable opportunistic nonsense, reality will bite.

If we thought the Pandora's jar that ruined the utopian dream of the internet contained nasty creatures, just wait till AI has been overrun by the malicious, the greedy, the stupid and the maniacal. We sleepwalked into the internet age and we're now going to sleepwalk into the age of machine intelligence and biological enhancement. How do we make sense of so much futurology screaming in our ears?